
The dyadic decision trees studied here differ from classical tree rules such as CART or C4.5. Those techniques select a tree according to

$$\hat{k} = \arg\min_{k \geq 1} \left\{ \hat{R}_n\big(\hat{f}_n^{(k)}\big) + \alpha k \right\}$$

for some $\alpha > 0$, whereas ours was roughly

$$\hat{k} = \arg\min_{k \geq 1} \left\{ \hat{R}_n\big(\hat{f}_n^{(k)}\big) + \alpha \sqrt{k} \right\}$$

for $\alpha \approx \sqrt{\frac{3 \log 2}{2n}}$. The square root penalty is essential for the risk bound; no such bound exists for CART or C4.5. Moreover, recent experimental work has shown that the square root penalty often performs better in practice. Finally, recent results show that a slightly tighter bounding procedure for the estimation error can be used to show that dyadic decision trees (with a slightly different pruning procedure) achieve a rate of

$$E\big[R(\hat{f}_n^T)\big] - R^* = O\big(n^{-1/2}\big) \quad \text{as } n \to \infty,$$

which turns out to be the minimax optimal rate (i.e., under the boundary assumptions above, no method can achieve a faster rate of convergence to the Bayes error).
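To make the difference between the two selection rules concrete, here is a minimal sketch of penalized selection over a tree-size index $k$: pick the $k$ that minimizes empirical risk plus a penalty, once with a penalty linear in $k$ and once with a square root penalty. The empirical risks and the value of `alpha` below are made-up numbers for illustration only, and `select_size` is a hypothetical helper, not part of any tree library.

```python
import math

def select_size(emp_risks, alpha, penalty):
    """Return the size k (1-indexed) minimizing emp_risks[k-1] + alpha * penalty(k)."""
    scores = [r + alpha * penalty(k) for k, r in enumerate(emp_risks, start=1)]
    return 1 + min(range(len(scores)), key=scores.__getitem__)

# Hypothetical empirical risks of the best tree of each size k = 1, ..., 8.
# They decrease with k, as empirical risk does when the model class grows.
emp_risks = [0.40, 0.30, 0.24, 0.20, 0.18, 0.17, 0.165, 0.16]
alpha = 0.03  # illustrative penalty weight (an assumption, not a recommended value)

k_linear = select_size(emp_risks, alpha, lambda k: k)  # penalty alpha * k
k_sqrt = select_size(emp_risks, alpha, math.sqrt)      # penalty alpha * sqrt(k)

print("linear penalty selects k =", k_linear)
print("square root penalty selects k =", k_sqrt)
```

For the same `alpha`, the square root penalty grows more slowly in $k$, so on these numbers it tolerates a larger tree than the linear penalty does.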

The notion of the dimension of a set arises in many areas of mathematics, and it is particularly relevant to the study of fractals (which, besides some important applications, make really cool t-shirts). The dimension indicates how we should measure the contents of a set (length, area, volume, etc.). The box-counting dimension is a simple definition of the dimension of a set. The main idea is to cover the set with boxes of sidelength $r$. Let $N(r)$ denote the smallest number of such boxes; then the box-counting dimension is defined as

$$\lim_{r \to 0} \frac{\log N(r)}{-\log r}.$$

Although the boxes considered above do not need to be aligned on a rectangular grid (and can in fact overlap), we can usually consider them over a grid and obtain an upper bound on the box-counting dimension. To illustrate the main ideas, let's consider a simple example and connect it to the classification scenario considered before.

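Let $f : [0,1] \to \mathbb{R}$ be a Lipschitz function with Lipschitz constant $L$ (i.e., $|f(a) - f(b)| \leq L|a - b|$ for all $a, b \in [0,1]$). Define the set

$$A = \{ x = (x_1, x_2) : x_2 = f(x_1) \},$$

that is, the set $A$ is the graph of the function $f$.

Consider a partition into $k^2$ square boxes (just like the ones we used in the histograms). Within each column of width $1/k$ the function $f$ varies by at most $L/k$, so the points in the set $A$ intersect at most $C'k$ boxes, with $C' = 1 + \lceil L \rceil$ (and the number of intersected boxes is at least $k$). The sidelength of the boxes is $1/k$; therefore the box-counting dimension of $A$ satisfies

$$\dim_B(A) \leq \lim_{1/k \to 0} \frac{\log (C'k)}{-\log (1/k)} = \lim_{k \to \infty} \frac{\log C' + \log k}{\log k} = 1.$$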
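The counting argument can be checked numerically. The sketch below makes assumptions that are not in the text: the function `f` (Lipschitz constant 2, values in $[0,1]$) is a hypothetical example, and the boxes hit by its graph are found approximately by dense sampling rather than exactly.

```python
import math

def f(x1):
    # Hypothetical example: |f'(x1)| = |2 cos(5 x1)| <= 2, so L = 2.
    return 0.5 + 0.4 * math.sin(5.0 * x1)

def count_boxes(func, k, samples_per_column=200):
    """Approximate number of cells of a k-by-k grid on [0,1]^2 hit by the graph of func."""
    hit = set()
    n = k * samples_per_column
    for i in range(n + 1):
        x1 = i / n
        col = min(int(x1 * k), k - 1)        # clamp x1 = 1 into the last column
        row = min(int(func(x1) * k), k - 1)  # clamp func = 1 into the top row
        hit.add((col, row))
    return len(hit)

L = 2                  # Lipschitz constant of the example f
C = 1 + math.ceil(L)   # per-column bound on the number of boxes hit

for k in (8, 64, 512):
    n_boxes = count_boxes(f, k)
    ratio = math.log(n_boxes) / math.log(k)  # log N / (-log(1/k))
    print(k, n_boxes, round(ratio, 3))
```

The count stays between `k` and `C * k`, so the ratio is squeezed between 1 and `1 + log(C) / log(k)`, which approaches 1 (slowly) as `k` grows.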

The result above holds for any “normal” set that does not occupy any area. For most sets the box-counting dimension is an integer, but for some “weird” sets (called fractal sets) it is not. For example, the Koch curve has box-counting dimension $\log 4 / \log 3 \approx 1.26$. This means that it is not quite as small as a 1-dimensional curve, but not as big as a 2-dimensional set (hence it occupies no area).
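As a rough check of the Koch curve value, here is a short worked computation. It relies on the standard self-similarity of the curve (four copies of itself, each scaled by $1/3$), which is not derived in this section, and the box count is only stated up to a constant factor $c > 0$. At scale $r_m = 3^{-m}$ the curve consists of $4^m$ pieces of diameter about $r_m$, so $N(3^{-m})$ is on the order of $c\,4^m$ and

$$\lim_{m \to \infty} \frac{\log N(3^{-m})}{-\log 3^{-m}} = \lim_{m \to \infty} \frac{\log c + m \log 4}{m \log 3} = \frac{\log 4}{\log 3} \approx 1.26.$$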

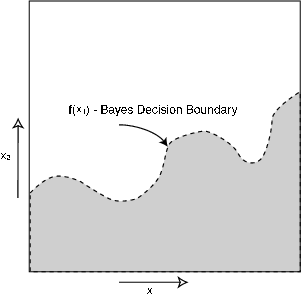

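To connect these concepts to our classification scenario, consider a simple example. Let $\eta(x) = P(Y = 1 \mid X = x)$ and assume $\eta$ has the form

$$\eta(x) = \frac{1}{2} + x_2 - f(x_1), \quad \forall\, x \equiv (x_1, x_2) \in \mathcal{X},$$

where $f$ is Lipschitz with Lipschitz constant $L$. The Bayes classifier is then given by

$$f^*(x) = \mathbf{1}\{\eta(x) \geq 1/2\} \equiv \mathbf{1}\{x_2 \geq f(x_1)\}.$$

This is depicted in [link]. Note that this is a special, restricted class of problems. That is, we are considering the subset of all classification problems such that the joint distribution $P_{XY}$ satisfies $P(Y = 1 \mid X = x) = 1/2 + x_2 - f(x_1)$ for some Lipschitz function $f$. The Bayes decision boundary is therefore given by

$$A = \{ x = (x_1, x_2) : x_2 = f(x_1) \}.$$

As we observed before, this set has box-counting dimension 1.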
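A small simulation can illustrate this setup. The sketch below rests on several assumptions: $X$ is taken uniform on $[0,1]^2$, the conditional probability is taken to be $\eta(x) = 1/2 + x_2 - f(x_1)$ clipped to $[0,1]$ so that it is a valid probability, and `f` is a hypothetical Lipschitz function. It compares the empirical error of the Bayes rule, which thresholds at the graph of `f`, with a naive rule that thresholds at the flat line $x_2 = 1/2$.

```python
import math
import random

def f(x1):
    # Hypothetical Lipschitz boundary function (Lipschitz constant 1).
    return 0.5 + 0.25 * math.sin(4.0 * x1)

def eta(x1, x2):
    # Assumed form of P(Y = 1 | X = x), clipped to [0, 1].
    return min(1.0, max(0.0, 0.5 + x2 - f(x1)))

def bayes(x1, x2):
    # Predict 1 exactly when the point lies on or above the graph of f.
    return 1 if x2 >= f(x1) else 0

def naive(x1, x2):
    # Competitor that ignores f and uses a flat boundary.
    return 1 if x2 >= 0.5 else 0

def sample(n, rng):
    """Draw n points with X uniform on [0,1]^2 and Y ~ Bernoulli(eta(X))."""
    data = []
    for _ in range(n):
        x1, x2 = rng.random(), rng.random()
        y = 1 if rng.random() < eta(x1, x2) else 0
        data.append((x1, x2, y))
    return data

def error_rate(classifier, data):
    return sum(classifier(x1, x2) != y for x1, x2, y in data) / len(data)

rng = random.Random(0)
data = sample(20000, rng)
bayes_err = error_rate(bayes, data)
naive_err = error_rate(naive, data)
print("Bayes rule error:", bayes_err)
print("flat rule error: ", naive_err)
```

Even the Bayes rule has a substantial error here, because $\eta$ is close to $1/2$ near the boundary; what matters for the theory is the gap between a classifier's risk and this Bayes error.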
