
Signal processing applications that run in "real time" read input data, process it, and then write the result to the output. This can be done either one sample at a time or one block of samples at a time.

A simple way to run a signal processing algorithm is to get one input sample from the A/D converter, process that sample in the DSP algorithm, then send the result to the D/A converter. When I/O is done this way, a lot of overhead processing is spent inputting and outputting that one sample. As an example, suppose we process one sample at a time with the following equation:

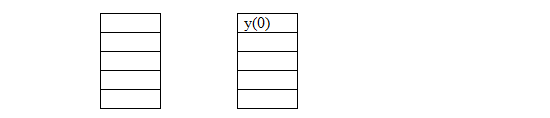

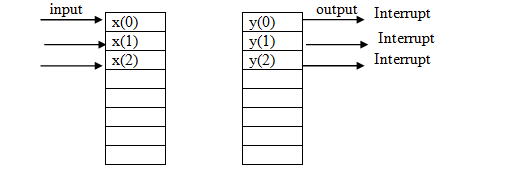

where x(n) is the input and y(n) is the output. Here is how the processing takes place. When an interrupt occurs, systems usually input a sample and output a sample at the same time. Suppose we have a value for the output, y(0), in memory before the interrupt occurs.

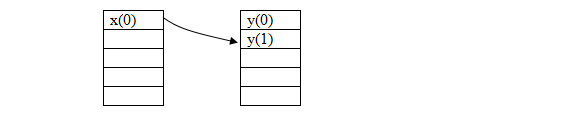

Then, when the interrupt occurs, y(0) is output and x(0) is input.

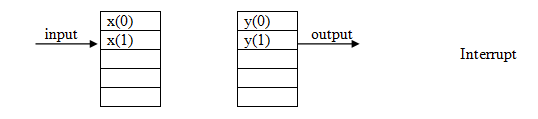

After this we can use x(0) to compute the next output. So y(1) = 2x(0).

Then another interrupt occurs and the next values are input and output.

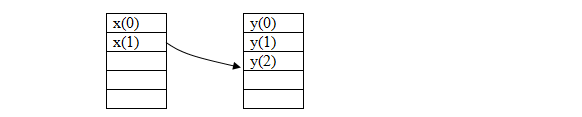

And the process repeats.

Notice that the processing of the input sample must be finished before the next interrupt occurs, or else we won't have any data to output. Also notice that the output is always delayed by one sample relative to the input: the current input cannot be output instantly, because its result has to wait for the next interrupt.
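The sequence above can be sketched as a small C simulation. This is only an illustration, not the code from the CCS project: it assumes the algorithm is a gain of 2 (taken from the y(1) = 2x(0) step above), models the interrupt as an ordinary function call, and uses the hypothetical names `SampleState`, `sample_interrupt`, and `run_sample_by_sample`.

```c
#include <stddef.h>

/* Hypothetical state: the output value held in memory until the next interrupt. */
typedef struct {
    int pending_output;  /* result computed from the previous input sample */
} SampleState;

/* Simulates one interrupt: the stored value is output, x_in is input,
   and the next output is computed from the new input (assumed y = 2x). */
int sample_interrupt(SampleState *state, int x_in)
{
    int y_out = state->pending_output;  /* value that would go to the D/A converter */
    state->pending_output = 2 * x_in;   /* must be ready before the next interrupt */
    return y_out;
}

/* Feeds n input samples through the simulated interrupt, one per call. */
void run_sample_by_sample(const int *x, int *y, size_t n, int initial_output)
{
    SampleState state = { initial_output };
    for (size_t i = 0; i < n; i++) {
        y[i] = sample_interrupt(&state, x[i]);
    }
}
```

With an initial output of 0 and inputs 1, 2, 3, 4, the outputs are 0, 2, 4, 6, which shows the one-sample delay: each result appears one interrupt after its input arrived.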

To reduce the overhead of processing one sample at a time between interrupts, the data can be buffered and then processed one block at a time. This is called "block processing." It is the method used in this module and in the Code Composer Studio (CCS) project. The benefit of block processing is that it reduces the overhead of the I/O processes. The drawback is that it introduces a larger delay in the output, since a whole block of data must be input before any of it is processed and output. The length of the block determines the minimum delay between input and output.
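As a rough sketch of how the block length sets the minimum delay, the helper below divides the block length by the sample rate. The function name `block_delay_seconds` and any sample rate passed to it are illustrative assumptions, not values taken from the CCS project.

```c
/* Minimum input-to-output delay, in seconds, introduced by buffering
   block_size samples at sample_rate_hz samples per second. */
double block_delay_seconds(unsigned block_size, double sample_rate_hz)
{
    return (double)block_size / sample_rate_hz;
}
```

For example, assuming a 48 kHz sample rate, a block of 48 samples gives a minimum delay of about 1 ms, and doubling the block length doubles that delay.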

Suppose we are processing the data with the same algorithm as above:

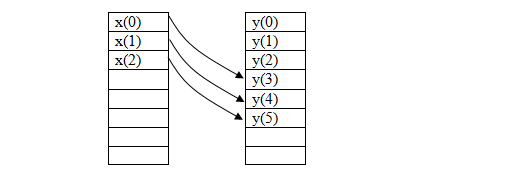

Also, suppose we are going to process the data with a block size of 3. This means we need to start with 3 values in our output buffer before we get more data.

Three interrupts occur and we get 3 new values and output the three values that are in the output buffer.

At this point we process the values that were received.

Then it starts all over again. Notice how far down x(0) is moved. This is the delay amount from the input to the output.
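The block-processing cycle described above can be sketched in C in the same way. This is a simplified simulation under stated assumptions: the algorithm is again taken to be a gain of 2, the output buffer starts out filled with zeros, and the block is processed inside the simulated interrupt call as soon as the last sample arrives. The names `BlockState`, `block_init`, and `block_interrupt` are hypothetical, and the actual CCS project may organize its buffers differently.

```c
#include <stddef.h>

#define BLOCK_SIZE 3  /* block length used in the example above */

/* Hypothetical buffers for one block of input and one block of output. */
typedef struct {
    int in_buf[BLOCK_SIZE];   /* samples collected since the last block was processed */
    int out_buf[BLOCK_SIZE];  /* results waiting to be output */
    size_t index;             /* position of the next sample within the block */
} BlockState;

/* Starts with BLOCK_SIZE values (zeros here) already in the output buffer. */
void block_init(BlockState *s)
{
    for (size_t i = 0; i < BLOCK_SIZE; i++) {
        s->in_buf[i] = 0;
        s->out_buf[i] = 0;
    }
    s->index = 0;
}

/* Processes the whole input block at once (assumed y = 2x). */
static void process_block(BlockState *s)
{
    for (size_t i = 0; i < BLOCK_SIZE; i++) {
        s->out_buf[i] = 2 * s->in_buf[i];
    }
}

/* Simulates one interrupt: output one stored value, input one new value,
   and process the block once BLOCK_SIZE samples have been collected. */
int block_interrupt(BlockState *s, int x_in)
{
    int y_out = s->out_buf[s->index];
    s->in_buf[s->index] = x_in;
    s->index++;
    if (s->index == BLOCK_SIZE) {
        process_block(s);
        s->index = 0;
    }
    return y_out;
}
```

Feeding the inputs 1 through 6 into `block_interrupt` yields the outputs 0, 0, 0, 2, 4, 6: the result for the first input does not appear until three interrupts later, which is the block-length delay described above.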
