
Digital computers can process discrete time signals using extremely flexible and powerful algorithms. However, most signals of interest are continuous time signals, which is how data almost always appears in nature. The theory discussed so far covers generating a discrete time signal from a continuous time signal through sampling and then perfectly reconstructing the original signal from its samples. It will now be shown how this theory can be applied to implement continuous time, linear time invariant systems using discrete time, linear time invariant systems. This is of key importance to many modern technologies, as it allows the power of digital computing to be leveraged for processing analog signals.

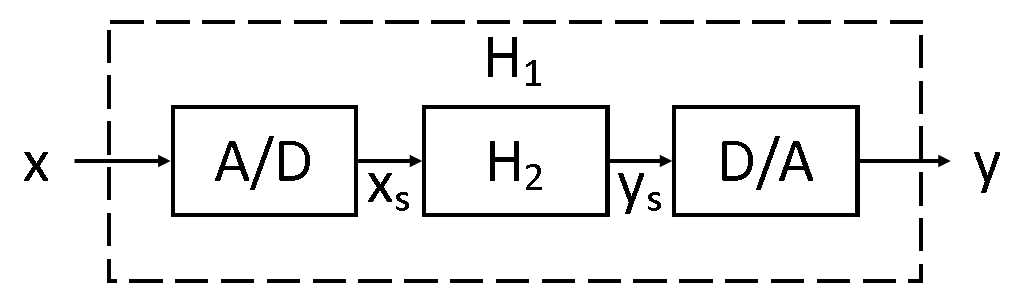

With the aim of processing continuous time signals using a discrete time system, we will now examine one of the most common structures of digital signal processing technologies. As an overview of the approach taken, the original continuous time signal is sampled to a discrete time signal in such a way that each period of the sample spectrum is as close as possible in shape to the spectrum of the original signal. Then a discrete time, linear time invariant filter is applied, which modifies the shape of the sample spectrum but cannot increase the bandlimit of the sampled signal, to produce another discrete time signal. This is reconstructed with a suitable reconstruction filter to produce a continuous time output signal, thus effectively implementing some continuous time system. This process is illustrated in [link] , and the spectra are shown for a specific case in [link] .
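The three stages described above (sample, filter in discrete time, reconstruct) can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the structure of any particular device: the function names `sample`, `moving_average`, and `reconstruct`, the 5 Hz test signal, the 100 Hz sampling rate, and the choice of a moving average as the discrete time filter are all hypothetical. Reconstruction here uses a finite sum of sinc functions, which only approximates the ideal reconstruction filter.

```python
import numpy as np

def sample(x, fs, duration):
    """Ideal sampler: evaluate the continuous time signal x(t) at t = n / fs."""
    n = np.arange(int(round(duration * fs)))
    return n, x(n / fs)

def moving_average(xs, length):
    """A simple causal discrete time LTI filter (FIR moving average).

    Chosen only for illustration; any discrete time LTI filter could be used.
    """
    h = np.ones(length) / length
    # Keep the first len(xs) output samples (zero initial conditions).
    return np.convolve(xs, h)[: len(xs)]

def reconstruct(ys, fs, t):
    """Approximate sinc reconstruction from a finite block of samples."""
    n = np.arange(len(ys))
    # Row k of the matrix holds sinc(fs * t[k] - n) for every sample index n.
    return np.sinc(fs * t[:, None] - n[None, :]) @ ys

# Assumed example: a 5 Hz cosine, sampled well above twice its frequency.
fs = 100.0
n, xs = sample(lambda t: np.cos(2 * np.pi * 5.0 * t), fs, duration=1.0)
ys = moving_average(xs, length=4)          # discrete time processing
t = np.linspace(0.0, 1.0, 500, endpoint=False)
y = reconstruct(ys, fs, t)                 # continuous time output on a dense grid
```

Evaluating `reconstruct` at the sampling instants returns the filtered samples themselves, which is a quick sanity check that the interpolation passes through every sample.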

Further discussion about each of these steps is necessary, and we will begin by discussing the analog to digital converter, often denoted by ADC or A/D. It is clear that in order to process a continuous time signal using discrete time techniques, we must sample the signal as an initial step. This is essentially the purpose of the ADC, although there are practical issues that will be discussed later. An ADC takes a continuous time analog signal as input and produces a discrete time digital signal as output, with the ideal infinite precision case corresponding to sampling. As stated by the Nyquist-Shannon sampling theorem, in order to retain all information about the original signal, we usually wish to sample above the Nyquist frequency, that is, at more than twice the highest frequency to which the original signal is bandlimited. When it is not possible to guarantee this condition, an anti-aliasing filter should be used.
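To see why the sampling condition matters, the following sketch shows aliasing for an ideal sampler. The helper names `sample_cosine` and `alias_frequency` and the specific frequencies (3 Hz and 7 Hz sampled at 10 Hz) are assumptions made for illustration. A 7 Hz cosine violates the condition at a 10 Hz sampling rate, and its samples are identical to those of a 3 Hz cosine, so the two signals cannot be told apart after sampling.

```python
import numpy as np

def sample_cosine(freq_hz, fs_hz, num_samples):
    """Samples of cos(2*pi*freq*t) taken at t = n / fs."""
    n = np.arange(num_samples)
    return np.cos(2 * np.pi * freq_hz * n / fs_hz)

def alias_frequency(freq_hz, fs_hz):
    """Apparent frequency, in [0, fs/2], of a real sinusoid sampled at fs."""
    folded = freq_hz % fs_hz
    return min(folded, fs_hz - folded)

fs = 10.0
low = sample_cosine(3.0, fs, 50)    # 3 Hz < fs / 2: sampled without aliasing
high = sample_cosine(7.0, fs, 50)   # 7 Hz > fs / 2: aliases down to 3 Hz

same_samples = np.allclose(low, high)
apparent = alias_frequency(7.0, fs)
```

An anti-aliasing filter placed before the sampler would attenuate the 7 Hz component so that it does not masquerade as a 3 Hz component in the samples.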

The discrete time filter is where the intentional modifications to the signal information occur. This is commonly done in digital computer software after the signal has been sampled by a hardware ADC and before it is used by a hardware DAC to construct the output. This allows the above setup to be quite flexible in the filter that it implements. If the signal is sampled above the Nyquist frequency, each period of the sample spectrum has the same shape as the spectrum of the original signal. Any modifications that the discrete filter makes to this shape can be passed on to a continuous time signal, assuming perfect reconstruction. Consequently, the process described will implement a continuous time, linear time invariant filter. This will be explained in more mathematical detail in the subsequent section. As usual, there are practical limitations that will be discussed later.
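As a rough numerical illustration of this idea, the sketch below evaluates the frequency response of the overall continuous time system for a given FIR discrete time filter. It assumes ideal sampling above the Nyquist frequency and ideal reconstruction, in which case an analog frequency f maps to the discrete time frequency 2πf/fs and the overall response is zero beyond half the sampling rate. The function name `effective_response`, the two-tap averaging filter, and the 1000 Hz sampling rate are hypothetical choices.

```python
import numpy as np

def effective_response(h, fs_hz, freqs_hz):
    """Overall continuous time frequency response for FIR coefficients h.

    Assumes ideal sampling at fs_hz and ideal reconstruction, so the
    response is the DTFT of h at 2*pi*f/fs inside the band |f| < fs/2
    and zero outside it.
    """
    h = np.asarray(h, dtype=float)
    freqs_hz = np.asarray(freqs_hz, dtype=float)
    omega = 2 * np.pi * freqs_hz / fs_hz        # radians per sample
    k = np.arange(len(h))
    dtft = np.exp(-1j * np.outer(omega, k)) @ h  # sum over k of h[k] e^{-j omega k}
    return np.where(np.abs(freqs_hz) < fs_hz / 2, dtft, 0.0)

# Assumed example: two-tap average, sampled at 1000 Hz.
H = effective_response([0.5, 0.5], 1000.0, [0.0, 250.0, 600.0])
gains = np.abs(H)
```

Changing only the coefficients `h` changes the continuous time filter that the whole system implements, which is the flexibility the paragraph above refers to.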
