
Even if we aren't able to choose pivots randomly, quicksort still requires only O(n log n) time when averaged over all possible permutations of its input. Because this average is simply the sum of the running times over all permutations of the input divided by n factorial, it is equivalent to sorting a randomly chosen permutation of the input. In that case the pivot choices are essentially random, which gives the algorithm the same expected running time as randomized quicksort.

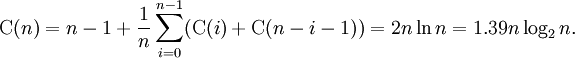

More precisely, the average number of comparisons over all permutations of the input sequence can be estimated accurately by solving the recurrence relation:

C(n) = (n-1) + (1/n) Σ_{i=0}^{n-1} (C(i) + C(n-1-i))

Here, n − 1 is the number of comparisons the partition uses. Since the pivot is equally likely to fall anywhere in the sorted list order, the sum is averaging over all possible splits.

The solution of the recurrence is approximately 2n ln n ≈ 1.39 n log2 n comparisons. This means that, on average, quicksort performs only about 39% more comparisons than in its best case, which needs about n log2 n. In this sense the average case is closer to the best case than to the worst case. This fast average runtime is another reason for quicksort's practical dominance over other sorting algorithms.
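The average-case figure can also be checked numerically. The following is a minimal Python sketch, assuming the recurrence has the standard form C(n) = (n-1) + (1/n) Σ (C(i) + C(n-1-i)) with C(0) = 0. The function name `average_comparisons` is illustrative and does not come from the original text. It tabulates C(n) and prints its ratio to n log2 n.

```python
import math

def average_comparisons(n_max):
    """Tabulate C(0..n_max) for C(n) = (n-1) + (2/n) * sum(C(0..n-1)).

    The factor 2/n comes from the symmetry of the sum:
    sum(C(i) + C(n-1-i)) over i = 0..n-1 equals 2 * sum(C(i)).
    C(0) = 0 is assumed (an empty list needs no comparisons).
    """
    c = [0.0] * (n_max + 1)
    prefix = 0.0  # running sum C(0) + ... + C(n-1)
    for n in range(1, n_max + 1):
        prefix += c[n - 1]
        c[n] = (n - 1) + 2.0 * prefix / n
    return c

if __name__ == "__main__":
    c = average_comparisons(10**5)
    for n in (10**2, 10**3, 10**5):
        # Ratio of average comparisons to n*log2(n)
        print(n, c[n] / (n * math.log2(n)))
```

The printed ratio approaches 2 ln 2 ≈ 1.39 only slowly and from below, because the exact solution contains a negative linear term in addition to 2n ln n.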

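In the best case, every partition splits the list into two halves of equal size. Taking n to be a power of 2, the number of comparisons then satisfies:

C(n) = (n-1) + C(n/2) + C(n/2)

= (n-1) + 2C(n/2)

= (n-1) + 2((n/2) - 1 + 2C(n/4))

= n + n + 4C(n/4) - 1 - 2

= n + n + n + 8C(n/8) - 1 - 2 - 4

= ...

= kn + 2^k C(n/2^k) - (1 + 2 + 4 + ... + 2^(k-1))

= kn + 2^k C(n/2^k) - 2^k + 1

where 0 < k ≤ log2 n. Setting k = log2 n gives

C(n) = n log2 n + n C(1) - n + 1.

Since a one-element list needs no comparisons, C(1) = 0 and the best case uses n log2 n - n + 1 comparisons, which is O(n log n).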
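The expansion above can be sanity-checked with a few lines of Python. This is a sketch under two assumptions that the derivation leaves implicit: n is a power of two, and C(1) = 0. The name `best_case_comparisons` is illustrative only.

```python
def best_case_comparisons(n):
    """Evaluate C(n) = (n - 1) + 2 * C(n / 2), assuming C(1) = 0
    and n a power of two (so n // 2 is exact at every level)."""
    if n == 1:
        return 0
    return (n - 1) + 2 * best_case_comparisons(n // 2)

# Compare against the closed form n*log2(n) + n*C(1) - n + 1 with C(1) = 0.
for k in range(0, 11):
    n = 2 ** k
    closed_form = n * k - n + 1  # log2(n) = k for n = 2**k
    assert best_case_comparisons(n) == closed_form
```

The loop finishes silently when the recurrence and the closed form agree for every tested power of two.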

The space used by quicksort depends on the version used.

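Quicksort has a space complexity of O(log n), even in the worst case, when it is carefully implemented such that in-place partitioning is used and, after partitioning, the smaller partition is sorted first by a recursive call while the larger partition is handled by tail recursion or iteration.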

The version of quicksort with in-place partitioning uses only constant additional space before making any recursive call. However, each nested recursive call must keep a constant amount of information on the call stack until it returns. The best case makes at most O(log n) nested recursive calls, so it uses O(log n) space. In the worst case, a plain recursive implementation makes O(n) nested recursive calls and so needs O(n) space.
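One common way to keep the stack shallow is to recurse only into the smaller partition and to loop over the larger one. The Python sketch below illustrates that idea. It assumes a Lomuto-style partition with the last element as pivot, which is a choice made here for brevity and not something the text prescribes. The names `partition` and `quicksort` and the `depth` bookkeeping are illustrative.

```python
def partition(a, lo, hi):
    """Lomuto partition of a[lo..hi] around a[hi]; returns the pivot's final index."""
    pivot = a[hi]
    i = lo
    for j in range(lo, hi):
        if a[j] < pivot:
            a[i], a[j] = a[j], a[i]
            i += 1
    a[i], a[hi] = a[hi], a[i]
    return i

def quicksort(a, lo=0, hi=None, depth=1):
    """Sort a[lo..hi] in place and return the deepest recursion level reached."""
    if hi is None:
        hi = len(a) - 1
    max_depth = depth
    while lo < hi:
        p = partition(a, lo, hi)
        if p - lo < hi - p:
            # Left side is smaller: recurse into it, then loop on the right side.
            max_depth = max(max_depth, quicksort(a, lo, p - 1, depth + 1))
            lo = p + 1
        else:
            # Right side is smaller (or equal): recurse into it, loop on the left.
            max_depth = max(max_depth, quicksort(a, p + 1, hi, depth + 1))
            hi = p - 1
    return max_depth
```

Because every recursive call receives at most half of the current range, the returned depth stays logarithmic in the list length even on already sorted input, where this pivot choice would otherwise produce O(n) nested calls.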

We are eliding a small detail here, however. If we consider sorting arbitrarily large lists, we have to keep in mind that variables like left and right can no longer be considered to occupy constant space; it takes O(log n) bits to index into a list of n items. Because we have variables like this in every stack frame, in reality quicksort requires O(log² n) bits of space in the best and average case and O(n log n) bits of space in the worst case. This isn't too terrible, though, since if the list contains mostly distinct elements, the list itself will also occupy O(n log n) bits of space.

The not-in-place version of quicksort uses O(n) space before it even makes any recursive calls. In the best case its space is still limited to O(n), because each level of the recursion uses half as much space as the last, and n + n/2 + n/4 + ... ≤ 2n.
